Why We Stopped Relying on Proprietary LLMs

The Fast Path (That Almost Worked)

When we started building HelpMyTaxes.jp, our goal was simple: use AI to make a complex, stressful process easier for our users.

Like many early-stage builders, we reached for proprietary LLM APIs. They were:

- Easy to integrate

- Well-documented

- Good enough to get something working quickly

And to be fair—they did work. We shipped faster than we ever could have otherwise.

But as the product matured and real users started trusting the platform with sensitive financial information, cracks began to show.

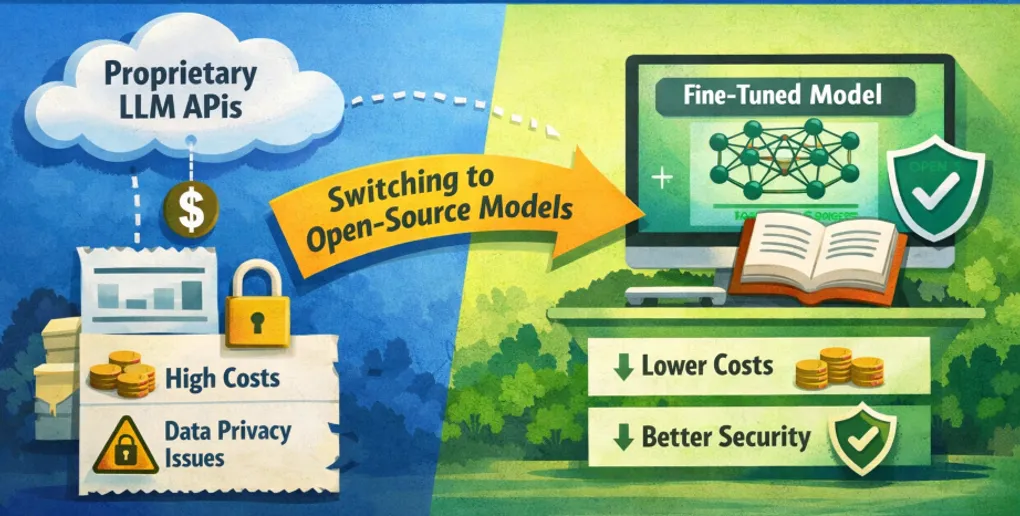

The Two Problems We Couldn’t Ignore

1. Cost Didn’t Scale with Usage

At small traffic levels, API costs felt manageable. But once we started modeling real usage patterns, the math became uncomfortable:

- Long conversations

- Repeated prompts for validation and correction

- Increasing expectations of accuracy

Each improvement meant more tokens. More tokens meant higher, unpredictable costs.

For a product handling tax-related workflows, that kind of cost volatility is dangerous.
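To make “the math became uncomfortable” concrete, here is a minimal sketch of how input tokens grow with conversation length. It assumes a stateless chat API that re-sends the full history on every turn; the message size and price below are hypothetical placeholders, not figures from our product or any provider.

```python
# Hypothetical figures for illustration only; real prices and message sizes vary.
TOKENS_PER_MESSAGE = 200        # assumed average tokens per user message or model reply
PRICE_PER_1K_INPUT_USD = 0.005  # assumed input price, not a quote from any provider


def input_tokens_for_conversation(turns: int, tokens_per_message: int = TOKENS_PER_MESSAGE) -> int:
    """Input tokens billed over a whole conversation with `turns` user turns.

    Assumes a stateless chat API: on turn k (1-based) the request re-sends
    all k user messages plus the k - 1 earlier replies, i.e. 2k - 1 messages.
    """
    return sum((2 * k - 1) * tokens_per_message for k in range(1, turns + 1))


def input_cost_usd(turns: int) -> float:
    """Input-side cost of one conversation (output tokens are ignored here)."""
    return input_tokens_for_conversation(turns) / 1000 * PRICE_PER_1K_INPUT_USD


if __name__ == "__main__":
    for turns in (5, 10, 20):
        tokens = input_tokens_for_conversation(turns)
        print(f"{turns:>2} turns: {tokens:>6} input tokens, ~${input_cost_usd(turns):.3f}")
```

Because the sum of 2k − 1 over k = 1..n is n², total input tokens come out to turns² × tokens_per_message under these assumptions: doubling the length of a conversation roughly quadruples its input cost, and every extra validation or correction pass adds another full-history request on top.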

2. User Data and Trust

The bigger issue was data privacy.

Even with strong contractual guarantees, sending sensitive user data to third-party models introduces risk:

- Regulatory uncertainty

- Limited control over data retention

- A trust gap with users who expect confidentiality

At some point, we had to ask ourselves:

If this were our financial data, would we be comfortable with this setup?

The honest answer was: not entirely.

Why Fine-Tuning Open-Source Models Made Sense

That question pushed us toward open-source models.

Fine-tuning offered a compelling alternative:

- Predictable costs — infrastructure scales more linearly than token billing

- Data stays inside our system — no external API calls with sensitive payloads

- Control — over model behavior, updates, and failures

- Regulatory alignment — easier to comply with local data residency laws
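The “predictable costs” point is easiest to see side by side. This is a rough sketch, assuming per-token API billing on one side and self-hosted GPU servers billed per month on the other; every price and capacity figure is a hypothetical placeholder, and engineering time is deliberately left out.

```python
import math

# All figures are hypothetical placeholders, not real quotes or measurements.
API_PRICE_PER_1K_TOKENS_USD = 0.01     # assumed blended per-token API price
SERVER_MONTHLY_USD = 1500.0            # assumed cost of one self-hosted GPU server
SERVER_CAPACITY_TOKENS = 500_000_000   # assumed tokens/month one server can serve


def api_monthly_cost(tokens: int) -> float:
    """Per-token billing: cost moves with every token processed."""
    return tokens / 1000 * API_PRICE_PER_1K_TOKENS_USD


def self_hosted_monthly_cost(tokens: int) -> float:
    """Provisioned capacity: cost only changes when another server is needed."""
    servers = max(1, math.ceil(tokens / SERVER_CAPACITY_TOKENS))
    return servers * SERVER_MONTHLY_USD


if __name__ == "__main__":
    for tokens in (50_000_000, 150_000_000, 600_000_000):
        print(f"{tokens:>11,} tokens/month: API ${api_monthly_cost(tokens):>8,.2f}  "
              f"self-hosted ${self_hosted_monthly_cost(tokens):>8,.2f}")
```

Under these made-up numbers the API is actually cheaper at 50M tokens a month ($500 vs $1,500), so the argument is not “self-hosting is always cheaper.” It is that the self-hosted line moves in steps you can plan for, while the API line tracks every conversation. The model also ignores the engineering effort of running the stack, which is exactly the complexity the next paragraph warns about.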

Of course, this wasn’t a silver bullet.

Fine-tuning shifts complexity from “someone else’s API” to your own engineering stack.

And that’s where the real challenge began.

From “We Decide” to “I Experiment”

Strategies are decided by teams, but code is written by individuals.

Once we committed to this path, someone had to figure out whether it was feasible. That’s where I stepped in.

I needed to validate that we could actually execute this without building a massive platform team first.

Fine-Tuning Is the Easy Part

Training a model is surprisingly straightforward today:

- Pick a base model

- Prepare data

- Run a training script
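As an illustration of the “prepare data” step, here is a minimal sketch that turns question/answer pairs into a JSONL training file. It assumes the role/content “messages” layout that many fine-tuning tools accept; the system prompt, file name, and sample pairs are hypothetical, not our actual dataset or format.

```python
import json
import os
import tempfile
from typing import Iterable, Tuple

# Hypothetical system prompt; the real product's prompt is not shown in this post.
SYSTEM_PROMPT = "You help users with Japanese tax filing questions."


def to_chat_example(question: str, answer: str) -> dict:
    """Wrap one Q&A pair in the common role/content "messages" layout."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question.strip()},
            {"role": "assistant", "content": answer.strip()},
        ]
    }


def write_jsonl(pairs: Iterable[Tuple[str, str]], path: str) -> int:
    """Write one JSON object per line, skipping pairs with an empty side.

    Returns the number of examples written.
    """
    written = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            if not question.strip() or not answer.strip():
                continue  # drop incomplete examples instead of training on them
            # ensure_ascii=False keeps Japanese text human-readable in the file
            f.write(json.dumps(to_chat_example(question, answer), ensure_ascii=False) + "\n")
            written += 1
    return written


if __name__ == "__main__":
    sample = [  # hypothetical placeholder pairs
        ("How do I report side income?", "(example answer)"),
        ("確定申告とは何ですか？", "（回答の例）"),
        ("", "an answer with no question"),
    ]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "train.jsonl")
        print(write_jsonl(sample, path), "examples written to", path)
```

Even this small step involves decisions (what to drop, how to encode non-ASCII text, which chat layout the trainer expects), which hints at why the surrounding work ends up being the hard part.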

What’s hard is everything around it:

- Reproducible environments

- Tracking experiments

- Managing GPU dependencies

- Serving models safely

- Rolling back when things break

I quickly realized I wasn’t just learning fine-tuning.

I was learning MLOps.

Why I Started Local-First

Before thinking about cloud deployments or Kubernetes clusters, I wanted answers to simpler questions:

- Can I train and serve models reliably on my own machine?

- Can I reproduce results days later?

- Can I track experiments without duct tape?

That led me to build a Docker-based, local-first MLOps setup—the foundation for everything that followed.
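Docker pins the environment, but “can I reproduce results days later?” also depends on recording exactly what each run used. Here is a minimal, standard-library-only sketch of that idea, before reaching for a dedicated tracker like MLflow. The `RunConfig` fields and model name are hypothetical, and seeding alone does not guarantee bit-identical results on GPUs.

```python
import hashlib
import json
import platform
import random
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RunConfig:
    """Settings needed to re-run an experiment (fields are illustrative)."""
    base_model: str
    learning_rate: float
    epochs: int
    seed: int


def set_seed(seed: int) -> None:
    """Seed Python's RNG. A real training run would also seed numpy and torch,
    e.g. numpy.random.seed(seed) and torch.manual_seed(seed)."""
    random.seed(seed)


def config_fingerprint(cfg: RunConfig) -> str:
    """Stable short hash of the config: identical settings give identical IDs."""
    payload = json.dumps(asdict(cfg), sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def run_record(cfg: RunConfig) -> dict:
    """Metadata to save next to each run so it can be compared or repeated later."""
    return {
        "config": asdict(cfg),
        "fingerprint": config_fingerprint(cfg),
        "python": platform.python_version(),
        "platform": platform.platform(),
    }


if __name__ == "__main__":
    # Hypothetical model name and hyperparameters, for illustration only.
    cfg = RunConfig(base_model="example-org/base-model", learning_rate=2e-4, epochs=3, seed=42)
    set_seed(cfg.seed)
    print(json.dumps(run_record(cfg), indent=2))
```

Sorting keys before hashing means two runs with the same settings always get the same fingerprint, which makes it easy to spot when a “rerun” quietly changed something.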

What This Series Is About

This blog series documents that journey.

Not from the perspective of a large ML platform team—but from a builder trying to ship a real product responsibly.

In the next posts, I’ll cover:

- Building a local MLOps stack with Docker

- Tracking experiments with MLflow

- Serving fine-tuned models securely

- Balancing cost, performance, and privacy

All the code and examples come from real work, not toy demos.

What’s Next

In the next post, I’ll talk about why fine-tuning alone isn’t enough, and how environment issues, dependency drift, and GPU setup almost derailed the entire effort.

If you’re building AI-powered products and wrestling with similar tradeoffs, I hope this series saves you some time—and a few painful mistakes.

Let’s build this the right way.